What’s New

This page lists the latest features, improvements, and fixes in Fewlogs, in reverse chronological order with the most recent updates at the top. If you have suggestions for improving Fewlogs, please don't hesitate to reach out! We are continually enhancing the tool to better fit our users' needs.

This release adds support for AI prompt libraries, a powerful new way to create, keep track of, and reuse your favourite AI prompts. AI prompt templates let you define prompt structures with parameters that are filled in dynamically.

You can specify things like:

- The main action or instruction to run.

- The AI persona or style traits to apply.

- The rendering format for text fragments retrieved from your semantic library.

- The language model or AI writing tool to run the prompt on.

- Extra context, added as headers and footers for each prompt part.
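To make the list above concrete, here is a minimal Python sketch of how those parts could combine into a final prompt. It is not Fewlogs's actual template format: the `PromptTemplate` class, its field names, and the `{text}` and `{topic}` placeholders are all hypothetical, chosen only to show parameters being filled in dynamically.

```python
from dataclasses import dataclass


@dataclass
class PromptTemplate:
    """Hypothetical prompt template; field names are illustrative, not Fewlogs's schema."""

    action: str           # main action or instruction; may contain {placeholders}
    persona: str          # AI persona or style traits to apply
    fragment_format: str  # how each library fragment is rendered; uses {text}
    model: str            # target language model or AI writing tool (stored only)
    header: str = ""      # extra context placed before the fragments
    footer: str = ""      # extra context placed after the fragments

    def render(self, fragments: list[str], **params: str) -> str:
        """Fill in the parameters and assemble the final prompt text."""
        body = "\n".join(self.fragment_format.format(text=f) for f in fragments)
        parts = [
            self.action.format(**params),
            f"Write in this style: {self.persona}",
            self.header,
            body,
            self.footer,
        ]
        # Drop empty sections so the prompt has no stray blank blocks.
        return "\n\n".join(p for p in parts if p)


template = PromptTemplate(
    action="Write a blog post about {topic}.",
    persona="friendly, concise, second person",
    fragment_format="- {text}",
    model="claude",
    header="Use these notes as source material:",
)
prompt = template.render(["Fact A", "Fact B"], topic="vector search")
print(prompt)
```

In this sketch the `model` field is only stored; a real tool would use it to decide where to send the rendered prompt.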

Once created, templates become reusable prompt recipes you can apply again and again. They help you:

- Save time and increase productivity by reusing prompt structures that work well.

- Iteratively improve prompts over time by keeping track of all your prompts.

- Standardize prompts across your team.

To learn more, see our documentation on how to create AI prompt templates in Fewlogs. You can also read more about how to create good AI prompts for your AI workflows.

In this release, we add the ability to create and use AI personas. Writing in a consistent, specific style is a critical part of content generation.

AI personas are writing style profiles that capture the tone, voice, and patterns of existing content. You can use them to steer newly generated text toward a brand, industry, or business style. To learn more, read what AI personas are and the benefits of using them.

You can create an AI persona in Fewlogs from text samples: Fewlogs analyzes them and auto-generates trait definitions that match their writing style. See the docs on how to create AI Personas from text samples.

We have improved the algorithm that scores how well text fragments match a search prompt. Previously, it scored fragments mainly relative to each other within a single search: this put the best matches at the top of the results, but a score from one search could not be compared with a score from another. Scores are now more absolute, so you can compare match quality across different searches and judge whether a library has good content for your prompt at all, while the best matches are still ranked highest within each set of results.
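The difference between relative and absolute scores can be illustrated with a small Python sketch. Fewlogs's scoring algorithm isn't described here, so this uses cosine similarity between made-up embedding vectors as a stand-in for an absolute score, and min-max scaling within one search as a stand-in for the old relative approach. The `relative_scores` helper and the sample vectors are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: an absolute score in [-1, 1] that doesn't depend on other results."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def relative_scores(raw: list[float]) -> list[float]:
    """Min-max scale one search's scores so its best match is 1.0 and its worst is 0.0."""
    lo, hi = min(raw), max(raw)
    if hi == lo:
        return [1.0] * len(raw)
    return [(s - lo) / (hi - lo) for s in raw]


# Made-up 2-D "embeddings" for a prompt and two libraries' fragments.
query = [1.0, 0.0]
good_library = [[0.9, 0.1], [0.8, 0.3], [0.7, 0.7]]
poor_library = [[0.1, 0.9], [0.0, 1.0], [0.2, 0.95]]

good_raw = [cosine(query, v) for v in good_library]
poor_raw = [cosine(query, v) for v in poor_library]

print("absolute:", [round(s, 2) for s in good_raw], [round(s, 2) for s in poor_raw])
print("relative:", [round(s, 2) for s in relative_scores(good_raw)],
      [round(s, 2) for s in relative_scores(poor_raw)])
```

With relative scaling, the best fragment in each search scores 1.0 even when the whole library is a poor match. The absolute cosine scores keep the same ranking inside each search but show that one library (about 0.99 at best) fits the prompt far better than the other (about 0.21).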

- The library search UI has been refreshed: the main actions for search results now appear in the bottom-right corner of the page.

- The "Copy" button on the library search page is now "Build prompt". It copies the search results and starts the content-generation flow, where you can choose how to assemble the prompt, which AI persona and main action to use, and which website to open once the prompt is copied to the clipboard.

- Navigating to personas or libraries now opens the last one you visited instead of a blank screen.

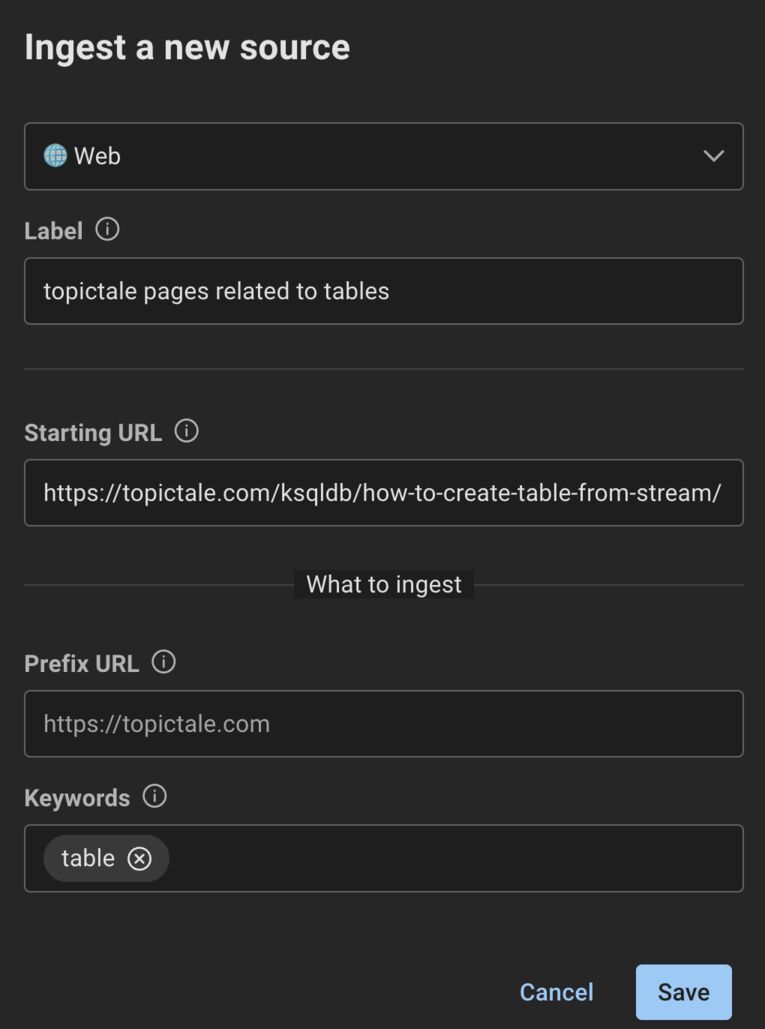

The web ingestor now gives you more control over which pages are ingested. In addition to limiting ingestion by URL prefix, you can now specify a list of keywords: pages containing any of these keywords are ingested, and all other pages are excluded. This lets you target ingestion precisely at pages with relevant content.
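The filtering rule described above can be sketched in Python as follows. This is a hypothetical illustration rather than Fewlogs's ingestor code: `should_ingest` is an invented helper, and it assumes case-insensitive substring matching and that an empty keyword list means no keyword filtering, neither of which is specified here.

```python
def should_ingest(url: str, page_text: str, url_prefix: str, keywords: list[str]) -> bool:
    """Hypothetical filter: URL prefix check, then an any-keyword match on the page text."""
    if not url.startswith(url_prefix):
        return False
    if not keywords:
        # Assumption: with no keywords configured, only the URL prefix applies.
        return True
    text = page_text.lower()
    # Assumption: case-insensitive substring match; one matching keyword is enough.
    return any(keyword.lower() in text for keyword in keywords)


pages = [
    ("https://example.com/docs/vectors", "An intro to vector search and embeddings."),
    ("https://example.com/docs/billing", "How invoices and payments work."),
    ("https://example.com/blog/embeddings", "Embeddings explained."),
]
prefix = "https://example.com/docs/"
keywords = ["embedding", "vector"]

ingested = [url for url, text in pages if should_ingest(url, text, prefix, keywords)]
print(ingested)
```

Here only the first page is ingested: the billing page matches the prefix but none of the keywords, and the blog page falls outside the prefix.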

Keyword-based ingestion makes it easier to build topic-specific libraries from large websites that cover many unrelated subjects.

- The source ingestion configuration interface now includes helpful tooltips and other UX refinements that make setting up ingestion more intuitive and streamlined.

- Fixed a bug that made the interface flicker briefly while loading the details of a newly selected library. Navigating between libraries is now smooth.

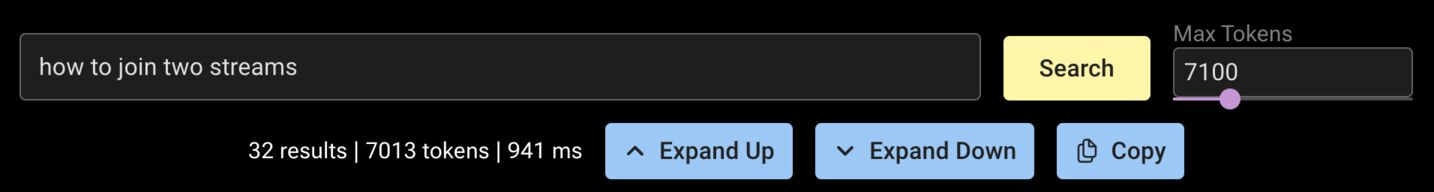

You can now increase or decrease the number of tokens a search retrieves from your library. This is particularly useful with language models that have large context windows, such as Claude.
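One straightforward way to apply a token budget is to take fragments in rank order until the budget is used up. The Python sketch below shows that approach under stated assumptions: `select_fragments` and `count_tokens` are hypothetical helpers, counting whitespace-separated words is only a rough stand-in for a real model tokenizer, and it is not necessarily how Fewlogs applies the limit.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in for a model tokenizer: counts whitespace-separated words."""
    return len(text.split())


def select_fragments(ranked: list[str], max_tokens: int) -> list[str]:
    """Take fragments in rank order until the next one would exceed max_tokens."""
    selected: list[str] = []
    used = 0
    for fragment in ranked:
        cost = count_tokens(fragment)
        if used + cost > max_tokens:
            break  # stop here so the results stay strictly in rank order
        selected.append(fragment)
        used += cost
    return selected


# Fragments sorted best match first (3, 2, 4 and 1 "tokens").
ranked = ["alpha beta gamma", "delta epsilon", "zeta eta theta iota", "kappa"]
print(select_fragments(ranked, max_tokens=5))   # smaller budget
print(select_fragments(ranked, max_tokens=20))  # larger budget, e.g. for a long-context model
```

Stopping at the first fragment that does not fit keeps the results strictly in rank order; skipping it and trying smaller, lower-ranked fragments would fill the budget more tightly at the cost of that ordering.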

A new YouTube ingestor lets you ingest YouTube videos.